Scalability determines whether a SaaS startup thrives or encounters performance walls that stifle growth. Many founders optimize for speed to market, then face painful, expensive architectural redesigns when scaling demands exceed the system’s capacity. The cost difference is stark: building for scalability from inception adds 10-15% to initial development time but prevents costly re-engineering that can consume 3-6 months and 50-70% of an engineering team’s capacity.

This isn’t academic: successful SaaS platforms show that architecture decisions made early compound throughout the company’s lifecycle. Salesforce handles 150 billion transactions daily across millions of tenants through sophisticated sharding, while Shopify manages Black Friday traffic surges of 5x normal volume through architecture designed for horizontal scaling. These capabilities weren’t afterthoughts; they were architectural foundations established when neither company served millions of customers.

The distinction between scaling reactively and proactively is the difference between managing growth and being managed by it. This guide outlines the essential architectural decisions and implementation strategies enabling SaaS platforms to scale smoothly from MVP through enterprise scale.

Foundation 1: Multi-Tenancy Architecture—The Economic Multiplier

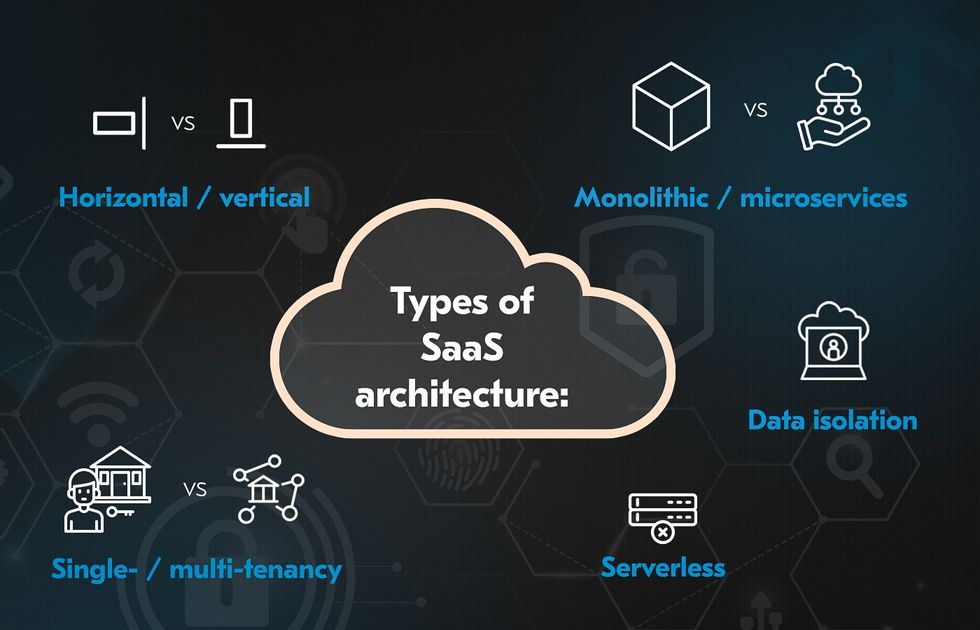

Multi-tenancy represents the defining architectural choice for SaaS platforms. Rather than provisioning separate infrastructure for each customer, multi-tenancy shares infrastructure across tenants while maintaining strict data isolation. This approach is foundational to SaaS business models, enabling unit economics where customers gain value and companies remain profitable.

The Multi-Tenancy Spectrum

Multi-tenancy exists on a spectrum of models, each with distinct tradeoffs:

Shared Everything (Single Database): All tenants share the same application instance and database. This maximizes cost efficiency—developers maintain one codebase, deploy once, and server resources are shared fully across tenants. However, this approach requires robust isolation at the application layer and becomes complex if tenants need customization or different database schemas. Isolation failures risk data leaks, making this approach suitable primarily for early-stage startups serving homogeneous customer bases.

Shared Application, Isolated Databases: Tenants share the application instance but each maintains a dedicated database. This approach balances cost efficiency with security. Shared infrastructure reduces deployment complexity, while isolated databases prevent cross-tenant data access and enable per-tenant backups and restoration. However, database provisioning must be fully automated, and managing hundreds of databases introduces operational complexity.

Single-Tenant Architecture: Each customer receives completely isolated infrastructure including application instances and databases. This maximizes customization and security but sacrifices cost efficiency—organizations cannot amortize infrastructure costs across customers. Single-tenancy is appropriate for enterprise deals with stringent compliance requirements, dedicated performance SLAs, or extreme customization demands.

Hybrid Approach: Many SaaS platforms implement hybrid strategies where standard customers operate in multi-tenant shared infrastructure, while enterprise customers with specific requirements receive dedicated single-tenant instances. This approach captures SMB cost efficiency while enabling enterprise revenue.

Implementation Discipline: Tenancy from Day One

Regardless of chosen model, the critical practice is embedding tenant awareness into every architectural component from inception. Every data model, API, cache key, and logging entry must tag tenant context. Developers must assume tenant isolation through design, not retrofit it later.

Implementing this discipline means:

- Database Schema Design: Every table includes a tenant_id column used in all queries. Indexes lead with tenant_id so tenant-scoped queries stay efficient; the tenant_id filter itself, not the index, is what keeps queries from reaching cross-tenant data.

- ORM and Query Patterns: Developers enforce tenant filtering at query execution, making isolation a default rather than an optional safeguard.

- Logging and Monitoring: All logs include tenant context so security audits and incident investigations can be scoped to specific tenants without accessing others’ data.

- API Design: All API endpoints enforce tenant context from authentication tokens, making cross-tenant access structurally impossible rather than relying on permission checks.

This discipline prevents the scenario where scaling pressures force urgent isolation retrofits costing months of engineering effort.
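As a rough illustration of "isolation by default", the sketch below binds a database handle to one tenant so that callers cannot forget the filter. It uses Python's built-in sqlite3 module purely for demonstration; the invoices table, the TenantScopedDB class, and the make_demo_connection helper are hypothetical names, not part of any particular framework.

```python
import sqlite3
from typing import List


class TenantScopedDB:
    """Wraps a connection so every query is bound to one tenant_id."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: int) -> None:
        self._conn = conn
        self._tenant_id = tenant_id

    def insert_invoice(self, amount_cents: int) -> None:
        # tenant_id comes from the wrapper, never from caller input.
        self._conn.execute(
            "INSERT INTO invoices (tenant_id, amount_cents) VALUES (?, ?)",
            (self._tenant_id, amount_cents),
        )

    def list_invoices(self) -> List[int]:
        # The tenant filter is applied here, so callers cannot omit it.
        rows = self._conn.execute(
            "SELECT amount_cents FROM invoices WHERE tenant_id = ? ORDER BY id",
            (self._tenant_id,),
        ).fetchall()
        return [row[0] for row in rows]


def make_demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical invoices table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE invoices ("
        " id INTEGER PRIMARY KEY,"
        " tenant_id INTEGER NOT NULL,"
        " amount_cents INTEGER NOT NULL)"
    )
    # Composite index led by tenant_id keeps per-tenant scans cheap.
    conn.execute("CREATE INDEX idx_invoices_tenant ON invoices (tenant_id, id)")
    return conn
```

In a real service the tenant_id passed to the wrapper would come from the authenticated token rather than from request parameters, which is what makes cross-tenant reads hard to write by accident.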

Foundation 2: Stateless Application Architecture—The Horizontal Scaling Enabler

The most impactful architectural decision for scalability is designing applications as stateless services. Stateless applications store no request-specific information in application memory: session data, user context, and request-specific state all reside in external systems (databases, caches, message queues). This design enables horizontal scaling: any application instance can handle any incoming request because no instance depends on having handled that client’s previous requests.

By contrast, stateful applications where instances maintain user sessions or request context require sticky load balancing—routing all requests from a specific user to the same instance. This creates bottlenecks and single points of failure. If an instance fails, all users with sessions on that instance lose their state.

Implementing Stateless Architecture:

Session Management: Store session data in Redis or database rather than application memory. Load balancers can route requests to any instance without session loss.

Request Context: Pass tenant IDs, user authentication tokens, and request context through HTTP headers or request bodies rather than storing them in application memory.

Caching Strategy: Use centralized caches (Redis, Memcached) rather than per-instance caches. Distributed caches remain consistent across instances and enable cache invalidation across all nodes simultaneously.

Long-Running Operations: Offload work exceeding a few seconds to background job queues (Kafka, RabbitMQ). Applications return immediately with a job reference, then notify clients via webhook when work completes.

This stateless design enables the horizontal scaling seen in successful SaaS platforms. As traffic increases, organizations add application instances, and the load balancer can spread requests evenly across all of them. Without stateless design, horizontal scaling yields diminishing returns because sticky sessions pin users to specific instances and prevent even distribution.
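The session-management point can be sketched in a few lines. In the example below, two application instances share one external session store, so a session created by one instance is readable by the other. The InMemorySessionStore class is only a stand-in for an external system such as Redis, and all class and method names are assumptions made for illustration.

```python
import uuid
from typing import Dict, Optional, Protocol


class SessionStore(Protocol):
    """Minimal interface an external session store would need to offer."""

    def get(self, session_id: str) -> Optional[dict]: ...
    def put(self, session_id: str, data: dict) -> None: ...


class InMemorySessionStore:
    """Stand-in for an external store such as Redis (demo assumption)."""

    def __init__(self) -> None:
        self._data: Dict[str, dict] = {}

    def get(self, session_id: str) -> Optional[dict]:
        found = self._data.get(session_id)
        return dict(found) if found is not None else None

    def put(self, session_id: str, data: dict) -> None:
        self._data[session_id] = dict(data)


class AppInstance:
    """One application process. It keeps no per-user state of its own."""

    def __init__(self, name: str, sessions: SessionStore) -> None:
        self.name = name
        self._sessions = sessions

    def login(self, user: str, tenant_id: int) -> str:
        session_id = uuid.uuid4().hex
        self._sessions.put(session_id, {"user": user, "tenant_id": tenant_id})
        return session_id

    def whoami(self, session_id: str) -> str:
        # Session state is looked up in the shared store on every request.
        session = self._sessions.get(session_id)
        if session is None:
            return "401 not logged in"
        return f"{session['user']} (tenant {session['tenant_id']}) via {self.name}"
```

Because neither instance holds the session itself, a load balancer is free to send the login to one instance and the next request to another, and losing an instance does not lose any sessions.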

Foundation 3: Multi-Layer Caching—The Performance Multiplier

Caching at multiple layers dramatically improves performance while enabling application scalability. Without strategic caching, database load becomes the primary scalability bottleneck.

Caching Layers Include:

API Gateway Caching: Content delivery networks and API gateways cache HTTP responses at the edge, closest to users. Requests for the same endpoint with identical parameters return cached responses without reaching application servers. This layer particularly benefits read-heavy operations and static content.

Application-Level Caching: Applications cache expensive computation results, database queries, and frequently accessed data in in-memory stores like Redis. For example, user profile data accessed on every request might be cached rather than queried from the database on each request.

Database Caching: Databases themselves employ read replicas and query result caching. Read-heavy operations are routed to replicas, preventing write nodes from becoming bottlenecks.

Effective Caching Strategies:

Cache invalidation remains one of computing’s hardest problems. Consider these approaches:

- Time-based expiration: Cache entries automatically expire after a defined interval (e.g., 5 minutes). Effective for data where slight staleness is acceptable.

- Event-based invalidation: When underlying data changes, cache entries are explicitly invalidated. More complex to implement but ensures freshness.

- Write-through caching: Applications write to the cache and the database as part of the same write operation, so the cache always reflects the most recent write.

The tradeoff is between freshness and performance. Aggressive caching maximizes performance but risks stale data. Conservative caching ensures freshness but provides less scalability benefit.
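To make the first two strategies concrete, here is a minimal sketch that combines time-based expiration with event-based invalidation in a cache-aside read path. The TTLCache class, the get_profile and update_profile helpers, the key format, and the dictionary standing in for a database are all hypothetical; a production system would more likely use a shared cache such as Redis.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, key: str) -> Optional[dict]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based expiration: drop the stale entry.
            del self._entries[key]
            return None
        return value

    def set(self, key: str, value: dict) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)


def profile_key(tenant_id: int, user_id: str) -> str:
    # Cache keys carry the tenant so entries never collide across tenants.
    return f"tenant:{tenant_id}:profile:{user_id}"


def get_profile(cache: TTLCache, db: dict, tenant_id: int, user_id: str) -> dict:
    """Cache-aside read: try the cache, fall back to the database."""
    key = profile_key(tenant_id, user_id)
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = db[(tenant_id, user_id)]
    cache.set(key, value)
    return value


def update_profile(cache: TTLCache, db: dict, tenant_id: int,
                   user_id: str, profile: dict) -> None:
    """Event-based invalidation: a write evicts the cached copy."""
    db[(tenant_id, user_id)] = profile
    cache.invalidate(profile_key(tenant_id, user_id))
```

Writes that go through update_profile are visible immediately, while changes made behind the cache's back only show up once the TTL lapses, which is the freshness-versus-performance tradeoff in miniature.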

Foundation 4: Database Scalability—Conquering the Bottleneck

Databases typically become scalability bottlenecks before application servers do. While applications scale horizontally through adding instances, databases cannot simply be replicated without addressing consistency issues. Strategic database scaling requires multiple complementary approaches.

Read Replicas: Replicate the primary database to secondary read-only instances. Query operations route to replicas, write operations go to the primary, and replication synchronizes changes. This approach works well for read-heavy workloads (common in SaaS) but introduces the complexity of managing replication lag, since a replica may briefly serve data older than the latest write.

Caching Layers: Rather than querying databases repeatedly for identical data, cache frequently accessed data in memory (Redis, Memcached). For example, caching user profiles, product catalogs, or configuration data reduces database queries by 50-80%.

Database Partitioning (within single database): Partition tables by logical criteria (date ranges, geographic regions, customer segments) so queries only scan relevant partitions rather than entire tables. This approach accelerates queries on large tables without requiring multiple database instances.

Database Sharding (across multiple databases): Distribute data across multiple independent database instances, each handling specific tenant subsets or data ranges. Sharding enables horizontal database scaling but introduces complexity—applications must route queries to appropriate shards, and managing cross-shard transactions becomes complicated.

Sharding Strategies Include:

- Tenant-Based Sharding: Each shard contains data for specific tenants. Route all queries for tenant ID 1-1000 to shard 1, tenant ID 1001-2000 to shard 2, etc. Provides clear isolation but creates uneven load if some tenants are much larger than others.

- Range-Based Sharding: Shard by data ranges (by date, geographic region, or other attributes). Enables efficient range queries but can create hot spots if recent data receives most queries.

- Hash-Based Sharding: Hash the partition key to determine shard. Distributes data evenly but makes range queries complicated (querying date ranges might touch every shard).
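The routing logic behind two of these strategies fits in a few lines. The sketch below shows tenant-range routing (IDs 1-1000 to shard 1, 1001-2000 to shard 2) and hash-based routing. The function names and the choice of SHA-256 are assumptions for illustration; a stable hash is used because Python's built-in hash() for strings varies between processes.

```python
import hashlib


def range_shard(tenant_id: int, tenants_per_shard: int = 1000) -> int:
    """Tenant IDs 1-1000 map to shard 1, 1001-2000 to shard 2, and so on."""
    if tenant_id < 1:
        raise ValueError("tenant_id must be >= 1")
    return (tenant_id - 1) // tenants_per_shard + 1


def hash_shard(partition_key: str, shard_count: int) -> int:
    """Map a key to a shard index in [0, shard_count) via a stable hash."""
    if shard_count < 1:
        raise ValueError("shard_count must be >= 1")
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

One caveat with the simple modulo approach: changing shard_count remaps most keys to different shards, so systems that expect to add shards often use consistent hashing or a lookup table instead.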

89% of SaaS companies successfully scaling beyond $100M annual recurring revenue (ARR) implement database sharding. For early-stage startups, sharding represents premature complexity—optimize read replicas and caching first. However, early attention to database design (ensuring tenant_id appears in all relevant schemas, using proper indexes) prevents painful re-architecture when sharding becomes necessary.

Foundation 5: API Design for Scalability—The Integration Layer

APIs represent the primary integration point between SaaS platforms and customers. Scalable API design ensures backend changes don’t require customer integration updates, and API performance doesn’t become a limiting factor.

Key Principles:

Stateless Design: APIs should not store request state in memory. Each request should be independently processable by any server instance.

Pagination and Filtering: APIs returning large datasets should support pagination to prevent clients from pulling excessive data. Implement filtering to reduce result sets. Without pagination, a single client requesting a large dataset can consume a disproportionate share of server and database resources.

Rate Limiting: Implement API rate limiting to prevent individual clients from consuming disproportionate resources. Rate limiting protects shared infrastructure and ensures fair resource allocation.

Asynchronous Patterns: For long-running operations, return immediately with an operation ID and provide webhooks for completion notifications. This keeps APIs responsive and prevents timeout issues.

Versioning Strategy: Design APIs enabling evolution without breaking existing clients. Version APIs explicitly (e.g., /api/v2/customers) so customers can migrate at their pace.

Monitoring and Analytics: Instrument APIs with comprehensive metrics measuring response times, error rates, and throughput. Identify bottlenecks before they impact customers.
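Of the principles above, rate limiting is the easiest to show in code. The following is a minimal token-bucket sketch with one bucket per tenant; it is one common way to implement rate limiting, not the only one, and the TokenBucket and TenantRateLimiter names are hypothetical. It keeps counters in process memory for simplicity, so a deployment with several stateless instances would need to hold them in a shared store instead.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._capacity = float(capacity)
        self._refill = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add tokens earned since the last call, capped at capacity.
        self._tokens = min(self._capacity, self._tokens + elapsed * self._refill)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


class TenantRateLimiter:
    """Keeps an independent bucket per tenant so one tenant cannot starve others."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._capacity = capacity
        self._refill = refill_per_second
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str) -> bool:
        bucket = self._buckets.get(tenant_id)
        if bucket is None:
            bucket = TokenBucket(self._capacity, self._refill, self._clock)
            self._buckets[tenant_id] = bucket
        return bucket.allow()
```

An API handler would call allow() with the tenant taken from the authentication token and return HTTP 429 when it yields False.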

Foundation 6: Infrastructure Architecture—Cloud-Native Approach

Modern SaaS platforms leverage cloud infrastructure specifically because it enables automatic scaling. Rather than managing physical servers, cloud providers abstract infrastructure through services enabling elastic scaling.

Recommended Cloud Infrastructure Components:

Load Balancers: Distribute incoming traffic across multiple application instances. Cloud-native load balancers (AWS Application Load Balancer, Google Cloud Load Balancing) handle SSL termination and health checking automatically, and offer sticky sessions for any legacy stateful services that still need them.

Auto-Scaling Groups: Configure applications to automatically add instances when CPU exceeds thresholds and remove instances when demand decreases. This automation ensures infrastructure matches demand without manual intervention.

Container Orchestration: Deploy applications as containers (Docker) orchestrated by Kubernetes or equivalent platforms. Containers encapsulate application code and dependencies, making deployments consistent across environments. Orchestration platforms automatically manage container placement, scaling, and resource allocation.

Managed Databases: Leverage cloud-managed databases (AWS RDS, Google Cloud SQL) rather than self-managing database infrastructure. Managed services handle backups, replication, failover, and patching automatically.

Object Storage: Store customer-generated content (images, documents, uploads) in object storage (S3, Azure Blob Storage) rather than filesystem storage. Object storage scales massively and provides built-in redundancy and availability.

Content Delivery Networks: Cache static content (CSS, JavaScript, images) in CDNs positioned globally. CDNs serve content from locations nearest to users, dramatically reducing latency.

Message Queues: For asynchronous processing, deploy message queues (Kafka, RabbitMQ, AWS SQS) decoupling request handling from background job processing. Requests queue jobs, applications process them asynchronously, and clients receive notifications upon completion.

Foundation 7: Microservices Architecture—Scalability Through Decomposition

As SaaS platforms grow, monolithic architectures where all functionality resides in single applications become bottlenecks. Microservices architecture decomposes applications into smaller, independently deployable services each handling specific functions.

Microservices Benefits for Scaling:

- Individual services scale based on actual demand: if authentication becomes a bottleneck, deploy additional authentication service instances without scaling the entire application.

- Services can be built with different technology stacks optimized for specific functions (an authentication service might use Node.js for rapid development, while computational services use Go for performance).

- Teams work independently on separate services, removing coordination bottlenecks in large organizations.

Microservices Complexity:

Microservices introduce distributed systems complexity. Services must communicate reliably despite network failures. Transactions spanning multiple services become complicated. Debugging issues spanning multiple services is difficult. For early-stage startups with small teams, microservices typically introduce unnecessary complexity.

Implementation Approach:

Start with a monolithic architecture but design it for future decomposition: clean module boundaries, clear internal APIs between components, and well-defined data ownership. As the platform grows and specific components become bottlenecks, extract them into dedicated microservices.

Use common microservices design patterns:

API Gateway Pattern: Single entry point routing requests to appropriate microservices.

Database per Service Pattern: Each microservice maintains its own database, preventing shared database coupling.

Circuit Breaker Pattern: Prevent cascading failures when services become unavailable through circuit breakers stopping calls to failing services.

Saga Pattern: Manage distributed transactions across multiple services using coordinated local transactions with compensation mechanisms for failures.
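Among these patterns, the circuit breaker is compact enough to sketch directly. The version below opens after a configurable number of consecutive failures, rejects calls while open, and lets one trial call through once a reset timeout has passed. The CircuitBreaker and CircuitOpenError names, the default thresholds, and the single-threaded design are assumptions for illustration; production libraries add locking, metrics, and per-error policies.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                # Open: fail fast without touching the struggling service.
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: half-open, so one trial call goes through.
        try:
            result = func()
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self._threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Callers wrap each outbound request, for example breaker.call(lambda: fetch_invoice(invoice_id)), where fetch_invoice is whatever hypothetical client function talks to the downstream service, and treat CircuitOpenError as a signal to serve a fallback.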

Foundation 8: Monitoring and Observability—Seeing System Health

Building scalable systems requires comprehensive visibility into system behavior. Without observability, optimization becomes guesswork.

Essential Observability Components:

Metrics: Numerical measurements of system behavior—response times, error rates, resource utilization, throughput. Store metrics in time-series databases (Prometheus, InfluxDB) enabling trend analysis.

Logs: Detailed records of events and activities. Centralize logs from all services into searchable repositories (ELK stack, Splunk) enabling incident investigation.

Traces: Follow individual requests through their entire journey across services. Distributed tracing shows which services each request touches and where latency occurs.

Observability Practices:

- Instrument Everything: Measure response times, error rates, and business metrics throughout the application. Without instrumentation, performance problems remain invisible until customers complain.

- Set Meaningful Alerts: Configure alerts for anomalies suggesting problems—error rate spikes, response time increases, database connection pool exhaustion.

- Correlate Metrics: When issues occur, correlate metrics to identify root causes. Performance degradation might stem from increased database latency, which might stem from cache invalidation, which might stem from a recent code change.

- Capacity Planning: Analyze historical metrics to project future resource needs. If database CPU grows 10% monthly, predict when current capacity becomes insufficient and provision proactively.
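The capacity-planning projection is simple compound-growth arithmetic: utilization after n months is current × (1 + growth)^n, so the first month that reaches a limit is the ceiling of log(limit / current) / log(1 + growth). A small sketch, with a hypothetical function name and illustrative numbers:

```python
import math


def months_until_exhausted(current_pct: float, monthly_growth: float,
                           limit_pct: float = 100.0) -> int:
    """Whole months until compounded utilization first reaches limit_pct."""
    if current_pct <= 0 or monthly_growth <= 0:
        raise ValueError("current_pct and monthly_growth must be positive")
    if current_pct >= limit_pct:
        return 0
    ratio = limit_pct / current_pct
    return math.ceil(math.log(ratio) / math.log(1.0 + monthly_growth))
```

For example, a database at 40% CPU growing 10% per month reaches an 80% alert threshold in 8 months (40 × 1.1^7 is about 78%, 40 × 1.1^8 is about 86%), which sets the deadline for provisioning more capacity.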

Foundation 9: Deployment Architecture—Continuous Delivery at Scale

Scalable systems require frequent, safe deployments enabling rapid iteration. Manual deployments become bottlenecks as teams grow.

CI/CD Pipeline: Automate building, testing, and deploying code. Every code commit triggers automated tests; passing tests automatically deploy to staging environments; teams then promote to production through manual gates.

Blue-Green Deployment: Maintain two production environments (Blue and Green). Deploy new versions to the idle environment, test thoroughly, then switch traffic from Blue to Green. If issues emerge, switch back instantly.

Infrastructure as Code: Define infrastructure (servers, databases, networks) as code in version control. This enables reproducible deployments and rapid environment provisioning.

Automated Scaling Policies: Define scaling rules upfront, such as adding instances when CPU exceeds 70% and removing instances when CPU drops below 30%. This automation responds to load changes without waiting for a human operator, though new instances still take time to start.
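The 70%/30% rule can be expressed as a small decision function. This is a sketch of the policy logic only, not of any cloud provider's API; the function name, the one-instance step size, and the minimum and maximum bounds are assumptions added for illustration.

```python
def desired_instances(current: int, avg_cpu_pct: float, *,
                      scale_out_above: float = 70.0,
                      scale_in_below: float = 30.0,
                      min_instances: int = 2,
                      max_instances: int = 20) -> int:
    """Return the instance count the policy asks for, one step at a time."""
    if avg_cpu_pct > scale_out_above:
        target = current + 1
    elif avg_cpu_pct < scale_in_below:
        target = current - 1
    else:
        # Between the thresholds: hold steady to avoid flapping.
        target = current
    # Clamp so the fleet never drops below a safe floor or exceeds budget.
    return max(min_instances, min(max_instances, target))
```

The gap between the two thresholds matters: if scale-out and scale-in triggered at the same CPU level, the fleet would oscillate as each change pushed utilization back across the line.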

Foundation 10: Security-First Architecture—Building Trust from Day One

Security retrofitting proves vastly more expensive than embedding security from inception. Build security into architecture rather than bolting it on later.

Non-Negotiable Security Elements:

- Encryption: Encrypt data at rest (AES-256) and in transit (TLS 1.3).

- Authentication: Implement OAuth 2.0 or similar standards for secure, scalable authentication.

- Authorization: Role-based access control ensures users access only their data.

- Audit Logging: Every action creates audit trails enabling investigation of security incidents.

- Secrets Management: Store API keys, database credentials, and other secrets in dedicated secret management systems (AWS Secrets Manager, HashiCorp Vault), never in code.

- Data Isolation: Enforce strict data isolation at all layers—database, cache, logs, and API responses.

Getting Started: Pragmatic Progression

Building fully scalable architecture from day one risks over-engineering for problems that may never emerge. Pragmatic approaches balance scalability with time-to-market:

Phase 1 (MVP Stage): Build a monolithic architecture with multi-tenant data isolation and stateless application design. Implement basic monitoring. Focus on getting to market.

Phase 2 (Product-Market Fit): Add caching layers and read replicas as database queries become bottlenecks. Implement comprehensive monitoring. Establish CI/CD practices enabling rapid iteration.

Phase 3 (Scale Stage): Implement database sharding if tenant growth requires it. Extract services with distinct scaling requirements into microservices. Expand observability across distributed architecture.

Phase 4 (Enterprise Stage): Implement advanced patterns—event sourcing, saga transactions, stream processing. Optimize for specific customer workloads.

This progression ensures technical decisions match actual problems rather than anticipated ones.

Building scalable SaaS architecture from day one reflects a strategic mindset—making decisions enabling future growth without unnecessary upfront complexity. The architectural foundations matter most: multi-tenancy designed for scale, stateless applications enabling horizontal scaling, strategic caching multiplying performance, databases planned for sharding, APIs designed for evolution, cloud-native infrastructure, observability providing visibility, and security embedded by default.

Successfully scaled SaaS platforms like Salesforce, Shopify, and Slack didn’t achieve their scale through heroic engineering efforts late in development. They built foundations enabling efficient scaling. These foundations—multi-tenancy, statelessness, caching, database strategy—compound over time, transforming what could have been architectural limitations into engines of growth.

For founders building SaaS companies in 2025, the competitive advantage flows to those making architectural decisions early that prevent the costly re-engineering that plagues so many successful startups. Build for scale not through premature optimization, but through thoughtful foundational choices enabling growth without rewriting core infrastructure. This discipline transforms scaling from crisis management into planned progression, enabling SaaS companies to grow revenue exponentially without growing engineering burden proportionally.